What happened? Why?#

Kafka is a distributed system. Our applications, connected to Kafka, have to handle many kinds of failure:

- Errors related to this distributed nature: networking, latency, timeouts

- Errors related to Kafka: rebalances, broker failures or slowness

- Errors related to the data: format, structure, bad or unexpected values

Gosh, that's a lot of things that can go wrong! As application developers, it's our job to handle them properly. If we don't, the whole business might be impacted (i.e., stopped).

Kafka exceptions: We've all been there#

SerializationException#

We have our nice Spring or Kafka Streams application, up and running for days, crunching thousands of records per minute. Everything works fine, until it doesn't:

Caused by: org.apache.kafka.common.errors.SerializationException: Error deserializing key/value for partition my-topic-1 at offset 42. If needed, please seek past the record to continue consumption.

Our consumer stumbled upon bad data and has no idea what to do. The format of the data does not match what we expected when we developed and configured our app. For example, we set up the Avro deserializer but received JSON data: it cannot work!

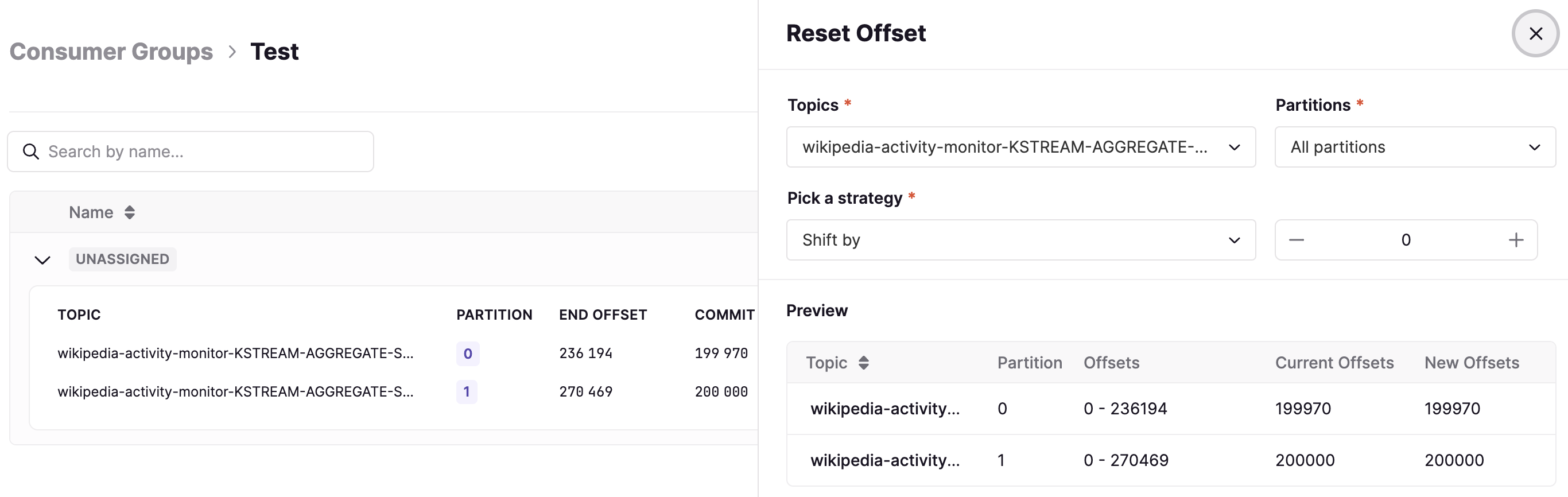

The error message advises us to "jump" over the failing record, ignoring it so that consumption can continue. How do we do that? What's the impact?

- Impact? Probably none, as the data can't be read anyway

- How? With tooling like Conduktor, or in code by seeking the consumer past the failing offset.
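In code, "seeking past the record" means moving the consumer's position to the failing offset plus one (in the Java client this is done with `consumer.seek(partition, offset + 1)`). The sketch below only illustrates the idea: `FakePartitionConsumer` is a hypothetical in-memory stand-in for a consumer reading one partition, and JSON stands in for whatever deserializer the application has configured.

```python
import json


class FakePartitionConsumer:
    """Hypothetical in-memory stand-in for a consumer assigned to one partition."""

    def __init__(self, log):
        self.log = log        # raw record values (bytes), indexed by offset
        self.position = 0     # offset of the next record to read

    def poll(self):
        """Return (offset, value), or None at the end of the log.

        Raises ValueError when the record cannot be deserialized; the
        position is left on the failing offset, so polling again fails again.
        """
        if self.position >= len(self.log):
            return None
        offset = self.position
        value = json.loads(self.log[offset])  # JSONDecodeError is a ValueError
        self.position = offset + 1
        return offset, value

    def seek(self, offset):
        self.position = offset


def consume_skipping_poison_pills(consumer):
    """Read the whole log, seeking past any record that fails to deserialize."""
    processed, skipped = [], []
    while True:
        try:
            record = consumer.poll()
        except ValueError:
            bad_offset = consumer.position
            skipped.append(bad_offset)     # keep a trace of what was ignored
            consumer.seek(bad_offset + 1)  # "seek past the record"
            continue
        if record is None:
            break
        processed.append(record)
    return processed, skipped
```

Recording the skipped offsets matters: without them, nobody can later tell which records were dropped or decide whether they need to be replayed.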

Other flavors of this error can be spotted in the wild, like with Kafka Connect connectors:

Caused by: org.apache.kafka.connect.errors.DataException: Failed to deserialize data for topic my-topic to Avro:

Or my favorite: "Unknown magic byte!". Nothing magic here.

The data in Kafka does not adhere to the wire format that's expected. Put simply, the data you're trying to read was not encoded using the standard Confluent Schema Registry format. Either:

- the data are corrupted (probably not)

- the data were encoded using a different format (probably yes)

- another library/language is not using the proper binary encoding (probably yes)

Caused by: org.apache.kafka.common.errors.SerializationException: Unknown magic byte!

The magic byte is the first byte (8 bits) of any payload serialized with the Confluent Schema Registry serializers, and its value must be 0. It is followed by a 4-byte schema ID, then the encoded data itself.
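To make the check concrete, here is a minimal sketch of how a reader could inspect that header by hand. It assumes the Confluent wire format of one magic byte, a 4-byte big-endian schema ID, then the encoded payload; the function name `parse_confluent_header` is made up for this illustration and is not part of any library.

```python
import struct

MAGIC_BYTE = 0  # expected value of the first byte


def parse_confluent_header(payload: bytes):
    """Split a payload into (schema_id, body), or raise ValueError."""
    if len(payload) < 5:
        raise ValueError("Payload too short to contain a magic byte and schema ID")
    # ">BI": big-endian, one unsigned byte followed by one unsigned 32-bit integer
    magic, schema_id = struct.unpack(">BI", payload[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"Unknown magic byte! Got {magic}, expected {MAGIC_BYTE}")
    return schema_id, payload[5:]
```

Feeding it plain JSON such as `b'{"id": 1}'` fails immediately, because the first byte is `{` (123) rather than 0. That is the typical situation behind this exception: a producer wrote the topic without the Schema Registry serializer.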

Unexpected data + your pipeline = ?#

- Wrong format

- Wrong or unknown schema

- Wrong ordering

- Wrong data

- Networking errors while processing

- Slow remote calls while processing (timeouts → rebalance)

All the things#

- Crash

Then what? Reset the consumer group offsets? Who? When?

- Skip

Here be dragons. Business critical? Ordering?

- Reprocess

Force your way! Need a change? Who? What? When?

- Dead Letter Queue

Then what? Who? How?
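As an illustration of the last two options combined, the sketch below retries each failing record a few times and then parks it in a dead letter queue along with enough context to investigate later. It is an in-memory simplification under stated assumptions: `process_with_dlq` is a hypothetical helper, records are plain `(offset, value)` tuples, and the "queue" is a Python list rather than a Kafka topic.

```python
def process_with_dlq(records, handler, max_attempts=3):
    """Apply handler to each (offset, value); dead-letter records that keep failing."""
    results, dead_letters = [], []
    for offset, value in records:
        last_error = None
        for _attempt in range(max_attempts):
            try:
                results.append(handler(value))
                break                      # success: stop retrying this record
            except Exception as exc:       # broad on purpose: any failure is retried
                last_error = exc
        else:
            # Runs only when no attempt succeeded (the loop never hit `break`).
            dead_letters.append({
                "offset": offset,
                "value": value,
                "error": repr(last_error),
                "attempts": max_attempts,
            })
    return results, dead_letters
```

Keeping the offset, the raw value and the error next to each other is what makes the "Then what? Who? How?" questions answerable: whoever picks up the dead letter queue can see what failed and why, without digging through application logs.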

Alerting: Detect Poison Pills (Lag)#
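Consumer lag is the distance between the end of a partition and the consumer group's committed offset. A poison pill typically shows up as a committed offset that stops moving while the lag keeps growing. Here is a minimal sketch of such a check, assuming we already collect `(committed_offset, end_offset)` samples per partition; the function names and the three-sample threshold are illustrative choices, not a recommendation.

```python
def lag(end_offset, committed_offset):
    """Number of records written to the partition but not yet committed by the group."""
    return max(end_offset - committed_offset, 0)


def looks_stuck(samples, min_samples=3):
    """Heuristic poison-pill detector for one partition.

    samples: list of (committed_offset, end_offset) tuples, oldest first.
    Returns True when, over the last `min_samples` samples, the committed
    offset never moved while the lag grew at every step.
    """
    if len(samples) < min_samples:
        return False
    recent = samples[-min_samples:]
    committed_frozen = len({committed for committed, _ in recent}) == 1
    lag_growing = all(
        lag(end_b, committed_b) > lag(end_a, committed_a)
        for (committed_a, end_a), (committed_b, end_b) in zip(recent, recent[1:])
    )
    return committed_frozen and lag_growing
```

A slow but healthy consumer also accumulates lag, which is why the check looks at the committed offset as well: lag alone cannot distinguish "behind" from "blocked on offset 42".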

Reprocess Poison Pills#

Resolve your Dead Letter Queue#

Chaos: Are your applications ready for the real world?#

- Very easy setup into your ecosystem

- Transform unknowns into knowns

- Deal with Kafka's oddities

- Prevent downtime

- poison pills?

- broken brokers?

- slow topics?

- idempotence?

$ curl -X POST http://conduktor-chaos/my-topic -d'{ "type": "RANDOM_AVRO" }'

$ curl -X POST http://conduktor-chaos/my-topic -d'{ "type": "RANDOM_SCHEMA_ID" }'

$ curl -X POST http://conduktor-chaos/my-topic -d'{ "type": "INSTABLE_LEADERS" }'

$ curl -X POST http://conduktor-chaos/my-topic -d'{ "type": "DUPLICATE_ALL_RECORDS" }'
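To illustrate what an application would need in order to survive the last scenario, here is a sketch of idempotent processing. It assumes, going by the name only, that `DUPLICATE_ALL_RECORDS` delivers every record more than once; the event shape (`id`, `account`, `amount`) and the function `apply_idempotently` are hypothetical.

```python
def apply_idempotently(events, balances=None, seen_ids=None):
    """Add each event's amount to its account, applying every event id at most once."""
    balances = {} if balances is None else balances
    seen_ids = set() if seen_ids is None else seen_ids
    for event in events:
        if event["id"] in seen_ids:
            continue                       # duplicate delivery: already applied
        seen_ids.add(event["id"])
        account = event["account"]
        balances[account] = balances.get(account, 0) + event["amount"]
    return balances
```

With this in place, processing a stream in which every event appears twice yields the same balances as processing each event once. In a real application the set of seen ids would have to live in durable storage and be updated together with the balances, otherwise a restart brings the duplicates back.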

We aim to accelerate the delivery of Kafka projects by making developers and organizations more efficient with Kafka.