Kafka Self-Service & Data Products for Financial Services

Turn Kafka into a governed data product platform. Expose, secure, and manage Kafka topics as reusable data products, accelerating developer autonomy while maintaining full auditability and control.

Different business units manage isolated clusters and topics without shared visibility. Data exists but isn't discoverable or reusable.

ACLs, schemas, and connectors are manually managed. Platform teams own every change while developers wait in ticket queues.

Shadow pipelines bypass GRC oversight. Lineage, consumer lag insights, and visibility into who consumes what are all missing.

Kafka is everywhere, but the data within it is not productized.

Typical state:

- No ownership model for data products

- Unclear who maintains schema versions

- No single source of truth for metadata

- Data exists but can't be found

Every Kafka operation requires a ticket:

- Topic creation: 3 days

- ACL update: 2 days

- Schema change: 1 week

Result: developers route around governance.

Risk and Compliance require strict oversight but lack:

- Unified audit trails

- Lineage tracking

- Access evidence for regulators

Result: Kafka is a compliance blind spot.

Automated Provisioning

Policy-driven templates for topics, ACLs, and connectors. Developers self-serve within guardrails

Data Product Catalog

Register topics as reusable data products with ownership, lineage, and access contracts

Identity Integration

Azure AD, Okta, LDAP, or IAM. Group-based access and least-privilege roles enforced automatically

Consistent RBAC

Same governance across Confluent, MSK, and self-managed. One policy, every cluster

Terraform & GitOps

Version-controlled, auditable provisioning through your existing CI/CD pipeline

GRC Visibility

Policy violations, encryption coverage, and user activity. All in one dashboard for compliance teams

Policy Controls

Enforce naming conventions, partition limits, retention settings, and schema standards automatically

Approval Workflows

Embed approvals into CI/CD or ServiceNow. Manual review where needed, automation everywhere else

Ownership Model

Assign data product owners. Clear accountability for schema versions, access approvals, and data quality

Lineage Tracking

See producers, consumers, and compliance status per data product. Know who uses what and why

Operational Efficiency

Eliminate tickets through automated provisioning and lifecycle cleanup. From weeks to minutes

Developer Portal

Self-service UI for topic discovery, access requests, and resource management

How Data Products Work

From request to production in minutes, not weeks.

Platform team sets policies: naming, schemas, retention, access. Guardrails apply automatically

Topics become discoverable products with owners, schemas, and access metadata

Developers request access, provision resources, and manage schemas within policy bounds

Every change logged. Lineage tracked. Compliance evidence generated automatically

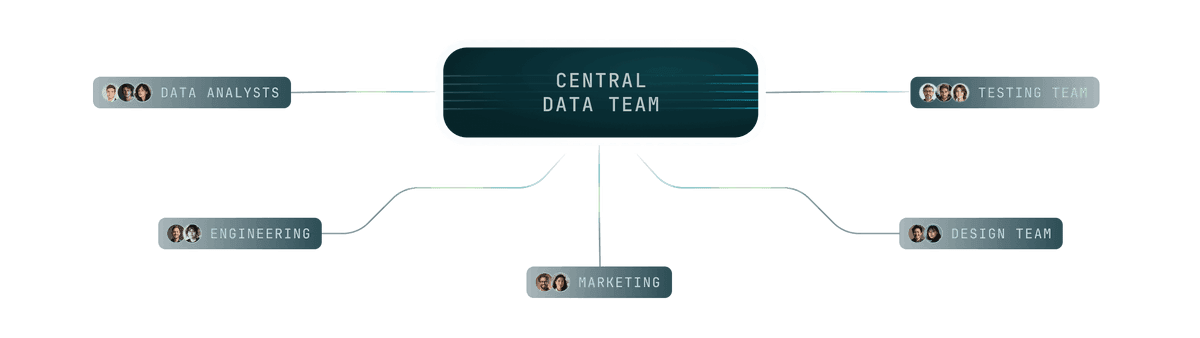

Data Product Enablement

Publish Kafka topics as certified products with schema contracts, ownership, and access controls

Developer Self-Service

Engineers request and manage topics, ACLs, and connectors with built-in approvals and audit

Hybrid Cloud Governance

Synchronize identity and schema validation across Confluent, MSK, and self-managed clusters

Compliance Automation

Generate auditable logs of every access, schema update, and data product change for regulators

Data Science Access

ML and analytics teams subscribe to curated, masked Kafka data products safely

Regulatory Sandboxes

Reuse governed data products in test environments without violating masking or retention policies


Frequently Asked Questions

What is a Kafka data product?

A data product is a Kafka topic packaged with ownership, schema, access metadata, and quality contracts. Teams discover and consume data products without understanding the underlying infrastructure.
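To make the idea concrete, here is a minimal sketch of what a data product record and a catalog lookup might look like. It is not Conduktor's data model: the `DataProduct` class, its field names, the `search` helper, and the sample topics are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor: a topic plus the metadata that makes it a product."""
    topic: str                # underlying Kafka topic name
    owner: str                # accountable team
    schema_subject: str       # schema registry subject holding the contract
    description: str = ""
    allowed_groups: frozenset = field(default_factory=frozenset)  # access metadata
    tags: frozenset = field(default_factory=frozenset)


def search(catalog, keyword):
    """Return products whose topic, description, or tags mention the keyword."""
    kw = keyword.lower()
    return [
        p for p in catalog
        if kw in p.topic.lower()
        or kw in p.description.lower()
        or any(kw in t.lower() for t in p.tags)
    ]


# Sample entries (invented for this example).
catalog = [
    DataProduct(
        topic="payments.card.authorized.v1",
        owner="payments-platform",
        schema_subject="payments.card.authorized.v1-value",
        description="Authorized card payments, PII masked",
        allowed_groups=frozenset({"fraud-analytics"}),
        tags=frozenset({"payments", "masked"}),
    ),
    DataProduct(
        topic="trading.fx.quotes.v1",
        owner="markets-data",
        schema_subject="trading.fx.quotes.v1-value",
        description="FX quote stream",
        tags=frozenset({"trading"}),
    ),
]

matches = search(catalog, "payments")
```

A consumer searching the catalog sees the owner, schema contract, and access groups without needing to know which cluster hosts the topic.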

How do you prevent teams from creating inconsistent resources?

Policy controls enforce naming conventions, partition limits, retention settings, and schema standards automatically. Teams can only create resources that comply with defined policies.
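As an illustration of the kind of check such a policy performs, the sketch below validates a topic request against a naming convention, a partition limit, and a retention cap. The pattern, the limits, and the `TopicRequest` and `violations` names are assumptions made for this example, not Conduktor's policy syntax.

```python
import re
from dataclasses import dataclass

# Hypothetical policy values; a platform team would define its own.
NAME_PATTERN = re.compile(r"^[a-z]+\.[a-z]+\.[a-z-]+\.v\d+$")  # domain.subdomain.event.vN
MAX_PARTITIONS = 12
MAX_RETENTION_MS = 7 * 24 * 60 * 60 * 1000  # 7 days


@dataclass
class TopicRequest:
    name: str
    partitions: int
    retention_ms: int


def violations(req):
    """Return a list of policy violations; an empty list means the request complies."""
    problems = []
    if not NAME_PATTERN.match(req.name):
        problems.append(f"name '{req.name}' does not match domain.subdomain.event.vN")
    if not 1 <= req.partitions <= MAX_PARTITIONS:
        problems.append(f"partitions must be between 1 and {MAX_PARTITIONS}")
    if not 0 < req.retention_ms <= MAX_RETENTION_MS:
        problems.append("retention must be positive and at most 7 days")
    return problems


ok = TopicRequest("payments.card.authorized.v1", partitions=6, retention_ms=86_400_000)
bad = TopicRequest("MyTopic", partitions=64, retention_ms=86_400_000)
```

Here `violations(ok)` is empty, while `violations(bad)` reports the naming and partition problems, so the second request would be blocked before it reaches the cluster.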

Can I still require approvals for production changes?

Yes. Conduktor supports configurable approval workflows. Require manual approval for production while allowing self-service for development environments.
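The routing logic behind such a workflow can be pictured as a small decision function. This is a sketch under stated assumptions: the environment names, the status strings, and `route_change` are hypothetical, and a real workflow would be configured in the platform or the CI/CD pipeline rather than hand-coded.

```python
# Hypothetical rule: only these environments need a human reviewer.
MANUAL_APPROVAL_ENVS = {"prod"}


def route_change(environment, policy_violations):
    """Decide how a change request proceeds.

    Non-compliant requests are rejected outright; compliant requests go to
    manual review in protected environments and are auto-approved elsewhere.
    """
    if policy_violations:
        return "rejected"
    if environment in MANUAL_APPROVAL_ENVS:
        return "pending_approval"
    return "auto_approved"
```

With this rule, a compliant change in `dev` is applied immediately, while the same change in `prod` waits for a reviewer.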

How does this integrate with existing identity providers?

Conduktor integrates with Azure AD, Okta, LDAP, and IAM providers. Group memberships map to Kafka access. No separate credential management.
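The mapping from group membership to Kafka access can be sketched as a lookup from identity-provider groups to prefix-scoped permissions, similar in spirit to Kafka's prefixed ACL resource patterns. The group names, grants, and `allowed_operations` function below are invented for illustration and do not reflect Conduktor's configuration format.

```python
# Hypothetical mapping: identity-provider group -> (topic prefix, operation) grants.
GROUP_GRANTS = {
    "fraud-analytics": [("payments.", "READ")],
    "payments-platform": [("payments.", "READ"), ("payments.", "WRITE")],
}


def allowed_operations(user_groups, topic):
    """Collect the operations a user may perform on a topic via group membership."""
    ops = set()
    for group in user_groups:
        for prefix, op in GROUP_GRANTS.get(group, []):
            if topic.startswith(prefix):
                ops.add(op)
    return ops
```

A user in `fraud-analytics` can read `payments.card.authorized.v1` but gets no access to `trading.fx.quotes.v1`; removing the user from the group in the identity provider removes the access, with no Kafka-side credential to revoke.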

Does this work with multiple Kafka distributions?

Yes. Same governance layer across Confluent Cloud, AWS MSK, and self-managed Kafka. One policy applied everywhere.

Ready for self-service Kafka?

See how Conduktor enables developer autonomy without sacrificing governance. Our team can help you design the right self-service strategy for your organization.