Why Every Kafka Incident Ends with "Restart It"

Teams invest in Kafka observability thinking they're prepared. Then an incident hits, and they realize their dashboards can't tell them which room is on fire.

Developers spend over 17 hours a week on debugging and maintenance. That's 42% of their working time, according to Stripe's Developer Coefficient report.

Nearly half the week spent not building, but fixing.

For teams running Kafka, the ratio can be even worse: when something breaks, most developers don't have the tools to understand what actually went wrong.

The observability trap

Every team running Kafka has the same story.

Adoption grows, more services depend on it, and leadership asks, "What's our observability story?" So dashboards get built: consumer lag charts, throughput graphs, alerts piped to Slack. Developers are told the observability story is handled. The metrics look good. The dashboards are green.

Everyone feels prepared.

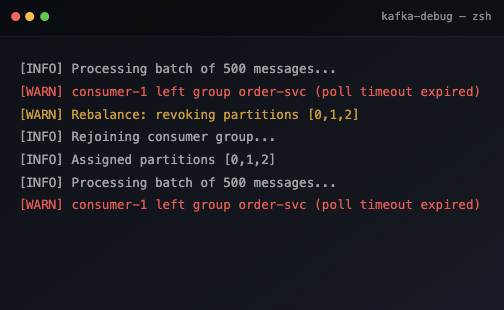

Then something breaks. The alert fires: consumer lag exceeds threshold. Someone pulls up Grafana. Yep, lag is high. The chart confirms what the alert already said.

Now what?

The dashboard shows the symptom, not the cause. Is it bad data? A stuck consumer? A schema mismatch? A partition assignment issue? The metrics can't tell you. They were never designed to.

So the team does what every team does: restart the consumer and hope it fixes itself. Sometimes it works. Sometimes it doesn't.

Either way, nobody actually knows what happened.

Alerts tell you the house is on fire

"Alerts tell you the house is on fire. They don't tell you which room."

This is the core problem with observability-first thinking.

- Consumer lag is high. But which messages are stuck? What's in them? When did it start?

- Throughput dropped. But what changed in the payloads? Which producers are affected?

- Connector is unhealthy. But which task failed? What's the error? Where are the logs?

- Schema compatibility failed. But what broke? Which consumers depend on it? What's the diff?

Observability tools are built to answer "is something wrong?" They're not built to answer "what is wrong?" or "where do I look?"

Most teams don't realize this until they're in the middle of an incident, staring at a dashboard that confirms the problem exists but offers nothing else.

The ad-hoc trap

Teams that feel this pain usually respond the same way: they build more tooling.

Custom scripts to consume from specific partitions. A Prometheus exporter someone wrote two years ago. A Grafana dashboard with twelve panels that made sense to the person who built it. These tools accumulate, and they sort of work, but they share the same blind spot.

Ad-hoc observability gives you snapshots. Not trends. Not history. Not answers.

- You can see that lag spiked. You can't see that it's been slowly climbing for three days.

- You can see a connector failed. You can't see the error message without SSH-ing into the worker node.

- You can see throughput dropped. You can't see what the messages actually contain.

The tooling tells you something is wrong. It doesn't help you fix it.
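The first bullet is the easiest to make concrete. Below is a minimal sketch, assuming lag is sampled and stored periodically for a partition. The sample values, the `slope` and `classify_lag` helpers, and their thresholds are all hypothetical and not part of any Kafka tool. Fitting a least-squares slope to the earlier samples separates a steady climb from a sudden spike, which no single reading can do.

```python
def slope(samples):
    """Least-squares slope of lag vs. time (records per hour).

    samples: list of (hours, lag) pairs with at least two distinct times.
    """
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_lag = sum(lag for _, lag in samples) / n
    cov = sum((t - mean_t) * (lag - mean_lag) for t, lag in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    return cov / var


def classify_lag(samples, climb_per_hour=10.0, spike_ratio=5.0):
    """Label a lag history 'climbing', 'spike', or 'steady'.

    Hypothetical heuristic (needs at least three samples): the history before
    the latest sample decides whether lag has been trending up; the latest
    sample is compared with that history's average to detect a sudden jump.
    """
    history, (_, latest) = samples[:-1], samples[-1]
    if slope(history) > climb_per_hour:
        return "climbing"
    baseline = sum(lag for _, lag in history) / len(history)
    if latest > spike_ratio * max(baseline, 1):
        return "spike"
    return "steady"


if __name__ == "__main__":
    hours = list(range(0, 73, 6))  # hypothetical: a sample every 6 hours for 3 days
    climbing = [(t, 100 + 20 * t) for t in hours]
    spike = [(t, 100) for t in hours[:-1]] + [(72, 5_000)]
    print(classify_lag(climbing))  # climbing
    print(classify_lag(spike))     # spike
```

Read at hour 72, the climbing series shows a lag of 1,540 and nothing more. Only the stored history shows that it has grown by 20 records an hour for three days.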

What debugging actually requires

Debugging isn't about metrics. It's about answering questions fast.

Here are six questions every developer should be able to answer in under 60 seconds during an incident:

- What was the last message produced to this topic?

- What's in the messages that are stuck?

- Which consumer group member is behind, and on which partition?

- What offset were we at yesterday at noon?

- What error is this connector task throwing?

- Is this schema compatible with what consumers expect?

These aren't exotic requirements. They're the first questions anyone asks when something breaks, and most teams can't answer them without writing code or digging through CLI output.
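Some of these are small once the raw numbers are in hand. Question three, for example, is per-partition arithmetic: lag is the log-end offset minus the group's committed offset, grouped by the member that owns each partition. Below is a minimal sketch, assuming you've already collected those values (for instance from the CURRENT-OFFSET, LOG-END-OFFSET and CONSUMER-ID columns printed by `kafka-consumer-groups.sh --describe --group <group>`). The `PartitionState` type, the helper names, and the sample data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionState:
    """One row of consumer-group state (hypothetical shape, not a Kafka API type)."""
    topic: str
    partition: int
    member: str            # consumer instance currently assigned this partition
    committed_offset: int  # next offset the group will read
    log_end_offset: int    # offset the next produced record will get


def lag_by_member(rows):
    """Return {member: [(topic, partition, lag), ...]}, worst partition first."""
    result = defaultdict(list)
    for row in rows:
        lag = row.log_end_offset - row.committed_offset
        result[row.member].append((row.topic, row.partition, lag))
    for parts in result.values():
        parts.sort(key=lambda p: p[2], reverse=True)
    return dict(result)


def most_behind(rows):
    """Return (member, topic, partition, lag) for the single worst partition."""
    worst = max(rows, key=lambda r: r.log_end_offset - r.committed_offset)
    return (worst.member, worst.topic, worst.partition,
            worst.log_end_offset - worst.committed_offset)


if __name__ == "__main__":
    # Hypothetical snapshot of an "orders" consumer group.
    rows = [
        PartitionState("orders", 0, "consumer-1", 1_200, 1_205),
        PartitionState("orders", 1, "consumer-1", 980, 990),
        PartitionState("orders", 2, "consumer-2", 4_000, 58_000),
    ]
    print(most_behind(rows))
    for member, parts in lag_by_member(rows).items():
        print(member, parts)
```

In this made-up snapshot, partition 2 on consumer-2 is 54,000 records behind while the other partitions are within ten records, which narrows the search to one member and one partition instead of the whole group.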

The capability to answer these questions is the foundation. Observability is the layer on top.

"If you can't debug, your prevention strategy is built on a blind spot."

Why the order matters

Prevention without debugging capability is a false sense of readiness.

You'll catch that something went wrong, but you won't know what. You'll alert the right people, but they won't be able to help. You'll have dashboards full of data, but none of it will answer the question that matters.

The teams that handle Kafka incidents well aren't the ones with the most dashboards. They're the ones who can look at the actual data and understand what's happening: messages, offsets, schemas, connector state.

Debug first. Prevent second.

Kafka debugging is a team sport. Join the conversation in the official Conduktor Community Slack.

Next up: Why Kafka debugging is stuck in 2010, and why most teams are still reaching for kafka-console-consumer when incidents hit.