Apache ActiveMQ, RabbitMQ, and Apache Kafka are generally considered messaging middleware. They integrate heterogeneous applications and decouple their interactions. However, Kafka goes beyond that definition and establishes itself as an event streaming platform. Although the three have overlapping features, they were designed to serve different use cases.

Understanding these differences beforehand will save you from using the wrong messaging middleware in your next project. This article explores each technology, compares and contrasts the differences, and concludes with intended use cases.

Technology Overview

What is Apache ActiveMQ?

Apache ActiveMQ is an open-source, multi-protocol message broker. Written primarily in Java, it enables applications to integrate across multiple messaging protocols, including AMQP, MQTT, STOMP, and OpenWire, and it supports the JMS API.

ActiveMQ supports both point-to-point and publish-subscribe messaging semantics, exposing standard messaging constructs such as queues and topics for a variety of use cases. It also integrates tightly with Apache Camel, which implements Enterprise Integration Patterns (EIPs) for connecting heterogeneous enterprise systems. ActiveMQ applies these patterns through Camel routes deployed directly on the broker.

There are currently two "flavors" of ActiveMQ: the well-known "Classic" broker and the next-generation broker code-named Artemis. Once Artemis reaches sufficient feature parity with the Classic code base, it will become the next major version of ActiveMQ.

What is RabbitMQ?

RabbitMQ is a message broker originally designed around message queues, that is, point-to-point communication. Although it was built on the AMQP protocol, it now supports multiple protocols, including MQTT. RabbitMQ also supports the publish-subscribe model and can scale by distributing queues across multiple nodes.

RabbitMQ’s primary messaging protocol, AMQP, comes with several features that make RabbitMQ a versatile and flexible enterprise message broker. These include broker-side message filtering and exchange bindings that support many message routing strategies.

Written primarily in Erlang, RabbitMQ provides client implementations for many languages, including Java, .NET, and Erlang.

What is Apache Kafka?

Apache Kafka is a distributed event streaming platform that can ingest events from different source systems at scale and store them in a fault-tolerant distributed system called a Kafka cluster. A Kafka cluster is a collection of brokers that organize events into topics and store them durably for a configurable amount of time.

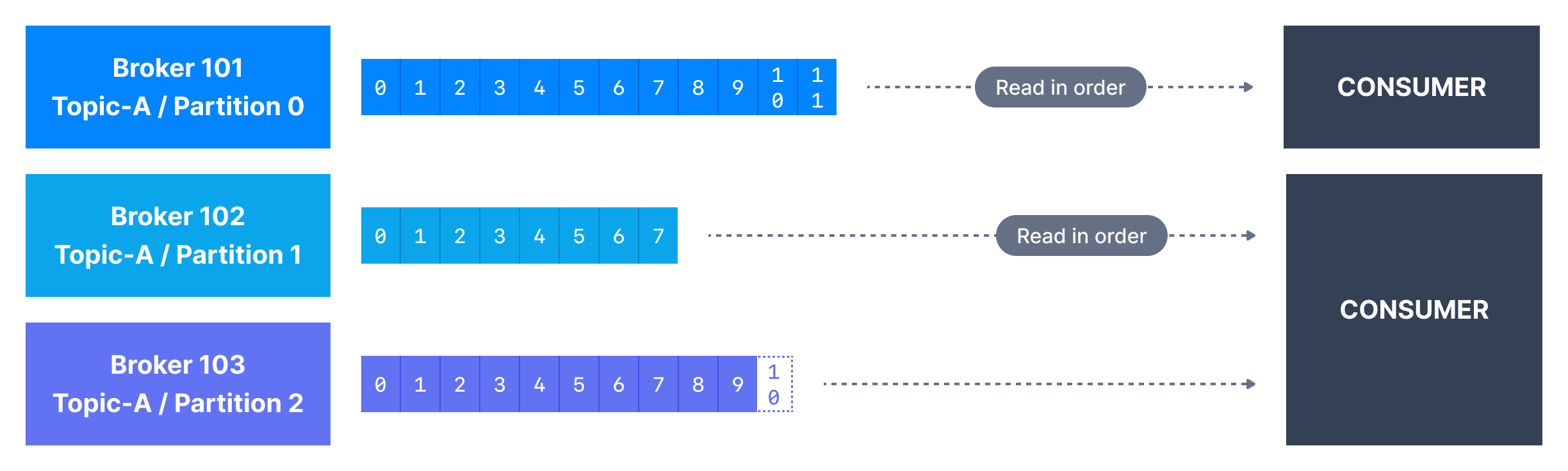

A Kafka topic is divided into several partitions, each holding a subset of the topic’s events. Incoming events are appended to a partition sequentially, which enables Kafka to achieve high write throughput. A partition can be read by many consumers in parallel as long as they belong to different consumer groups, and each group tracks its own position in the partition. Within a single consumer group, each partition is assigned to at most one consumer.
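In Kafka, consumers that share a group ID split a topic’s partitions among themselves, so each partition goes to at most one member of the group, while separate groups each read the full topic independently. The Python sketch below illustrates that rule with a simplified round-robin assignment. It is not Kafka’s actual assignor code (Kafka ships several strategies, selected through the consumer’s partition.assignment.strategy setting), and the group and consumer names are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Spread a topic's partitions across the members of ONE consumer group.

    Simplified round-robin: each partition goes to exactly one member, and
    members beyond the number of partitions are left idle.
    """
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


partitions = [0, 1, 2]

# Hypothetical groups: each one reads the whole topic, independently of the other.
groups = {
    "billing": ["billing-1", "billing-2"],
    "analytics": ["analytics-1", "analytics-2", "analytics-3", "analytics-4"],
}

for group, members in groups.items():
    print(group, assign_partitions(partitions, members))
# billing {'billing-1': [0, 2], 'billing-2': [1]}
# analytics {'analytics-1': [0], 'analytics-2': [1], 'analytics-3': [2], 'analytics-4': []}
```

Adding a fourth member to a three-partition group buys no extra parallelism. That is why partition count effectively caps how many consumers in one group can work at once.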

Its storage architecture and consumption model make Kafka significantly different from typical message brokers. Kafka also has no concept of queues. We will explore these differences further in the coming sections.

Apache Kafka vs RabbitMQ vs ActiveMQ

ActiveMQ, RabbitMQ, and Kafka are generally considered message brokers, enabling asynchronous and decoupled communication among applications. Although they have some overlapping features, fundamental differences exist in their architecture, message exchange patterns, and the intended use cases.

Enterprise messaging and event streaming are different concepts that we should understand before starting the comparison.

Understanding messaging vs. streaming

Commands and Events are the two constructs for exchanging messages in asynchronous communication.

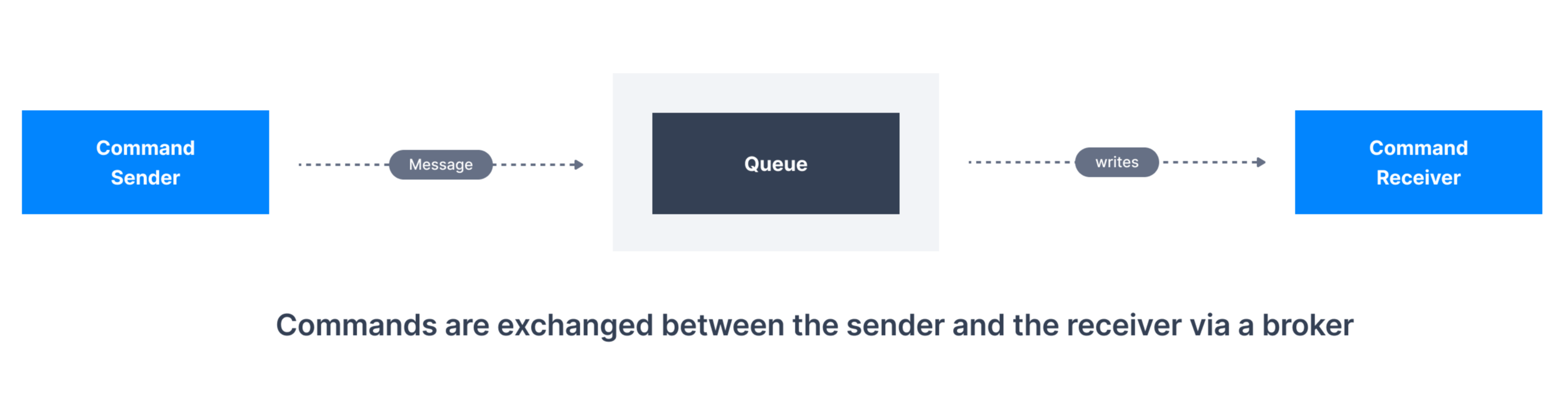

A Command represents a request to be processed. A Command has one sender and one receiver. The receiver must process the command and report the outcome back to the sender, which gives the sender a guarantee that the request was handled. A loan application request is an example of a Command: the loan processor must respond to the sender with the outcome.

Messaging is focused on exchanging Commands between senders and receivers with a strong delivery guarantee. Message queues and conversational messaging (RPC-style) are two messaging use cases.

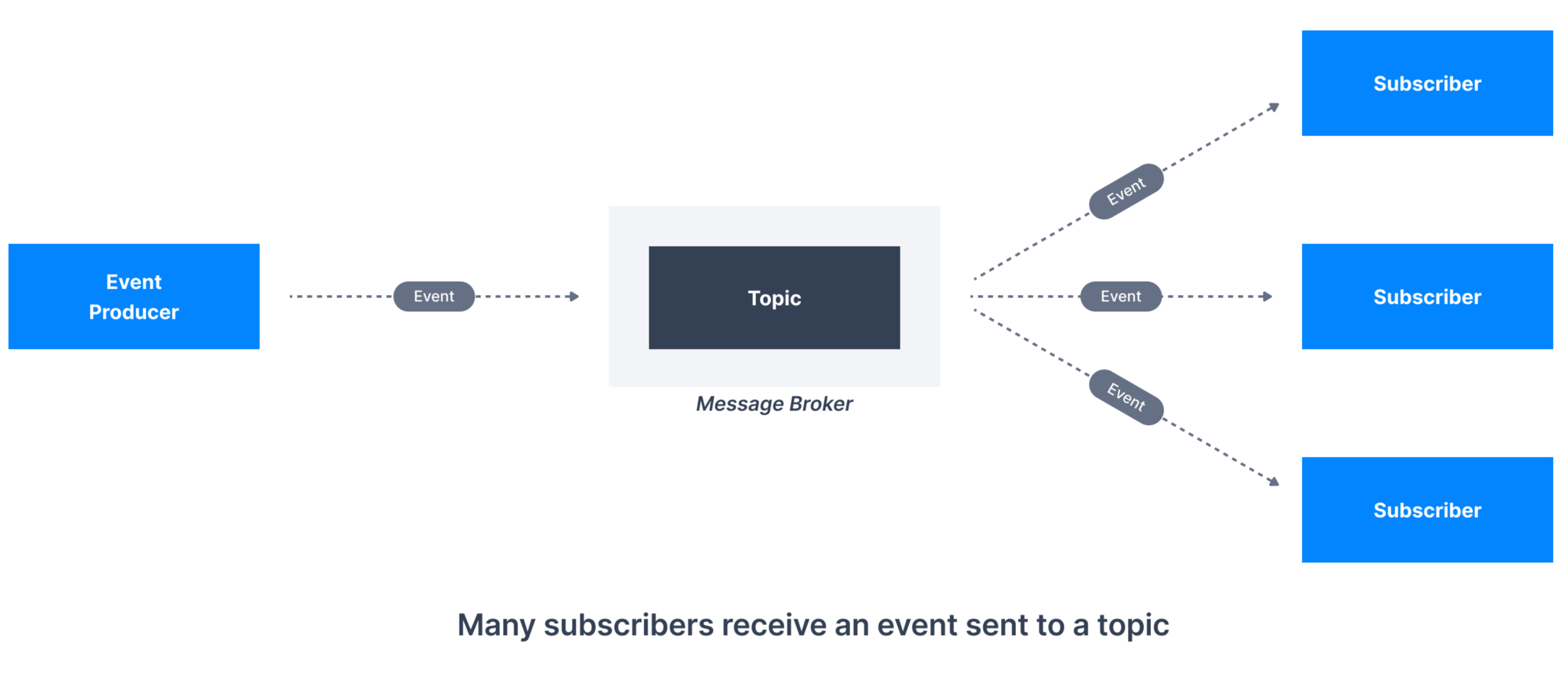

An Event represents a state change in an application. It is expressed in the past tense and carries the information receivers need to learn about the state transition (event-carried state transfer). Unlike a Command, an Event doesn’t expect a response and can be processed by many receivers. Events are the basis of publish-subscribe scenarios, where a single event is received by many subscribers.
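The difference between the two constructs can be sketched with two tiny in-memory buses in Python. This is an illustration under simplifying assumptions, not the API of any of the brokers discussed here. CommandBus, EventBus, the loan approval rule, and the message names are all hypothetical. A command has exactly one receiver whose outcome flows back to the sender, while an event is fanned out to every subscriber and no reply is expected.

```python
from typing import Callable, Dict, List


class CommandBus:
    """Point-to-point: each command type has exactly one receiver, which replies."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[dict], str]] = {}

    def register(self, command_type: str, handler: Callable[[dict], str]) -> None:
        if command_type in self._handlers:
            raise ValueError(f"{command_type} already has a receiver")
        self._handlers[command_type] = handler

    def send(self, command_type: str, payload: dict) -> str:
        # The sender gets the receiver's outcome back.
        return self._handlers[command_type](payload)


class EventBus:
    """Publish-subscribe: every subscriber receives the event; nobody replies."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = {}

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._subscribers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        for handler in self._subscribers.get(event_type, []):
            handler(payload)  # return values are ignored


# Command: one receiver decides, and the sender learns the outcome.
commands = CommandBus()
commands.register("ApplyForLoan", lambda p: "approved" if p["amount"] <= 10_000 else "rejected")
outcome = commands.send("ApplyForLoan", {"amount": 5_000})

# Event: a past-tense fact fanned out to every interested subscriber.
events = EventBus()
received = []
events.subscribe("LoanApproved", lambda p: received.append(("email", p["loan_id"])))
events.subscribe("LoanApproved", lambda p: received.append(("audit", p["loan_id"])))
events.publish("LoanApproved", {"loan_id": 7})
```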

Streaming focuses on producing and consuming Events across different systems where delivery guarantees and acknowledgments are not top priorities.

ActiveMQ and RabbitMQ are well suited to enterprise messaging use cases where end-to-end delivery guarantees are expected, which makes them ideal for building transactional systems.

Apache Kafka is an event streaming platform capable of delivering large volumes of events to subscribers at high throughput, in a publish-subscribe manner.

Knowing this key difference will help you decide on the right message broker that fits into your application use case.

Message retention

Message consumption is considered a destructive operation in message brokers such as ActiveMQ and RabbitMQ: a message is deleted from the broker once consumers have received and acknowledged it. Whether a message is also written to disk while it waits, so that it survives a broker restart, depends on explicit configuration.

For example, RabbitMQ lets you declare durable queues, which survive a broker restart, and publish persistent messages to them.

Persistent messages are written to disk as soon as they reach the queue. Transient (regular) messages are kept in memory and paged out to disk only under memory pressure. ActiveMQ also supports two persistence options: the default file-based journal is an append-only, disk-based structure with high performance, while the JDBC store keeps messages in a JDBC-compliant database.
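To make destructive consumption concrete, here is a toy in-memory queue in Python. It is an assumption-laden sketch, not how RabbitMQ or ActiveMQ are implemented. The AckQueue class and its methods are hypothetical, loosely echoing AMQP’s basic.ack and basic.nack. A delivered message stays pending until the consumer acknowledges it, at which point it is gone for good. A negative acknowledgment puts it back for redelivery.

```python
from collections import deque


class AckQueue:
    """Toy broker queue (hypothetical): acknowledged messages are deleted."""

    def __init__(self):
        self._ready = deque()   # waiting to be delivered
        self._unacked = {}      # delivery tag -> delivered but not yet acknowledged
        self._next_tag = 0

    def publish(self, message):
        self._ready.append(message)

    def get(self):
        """Deliver the next message as (tag, message), or None if the queue is empty."""
        if not self._ready:
            return None
        self._next_tag += 1
        message = self._ready.popleft()
        self._unacked[self._next_tag] = message
        return self._next_tag, message

    def ack(self, tag):
        # Consumption is destructive: once acknowledged, the message is gone.
        del self._unacked[tag]

    def nack(self, tag):
        # Simplification: put the message back at the head of the queue.
        self._ready.appendleft(self._unacked.pop(tag))

    def depth(self):
        return len(self._ready) + len(self._unacked)


q = AckQueue()
q.publish("order-1")
q.publish("order-2")

tag, msg = q.get()     # "order-1"
q.nack(tag)            # processing failed, so the message is requeued
tag, msg = q.get()     # "order-1" is delivered again
q.ack(tag)             # now it is removed from the broker for good
print(msg, q.depth())  # order-1 1
```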

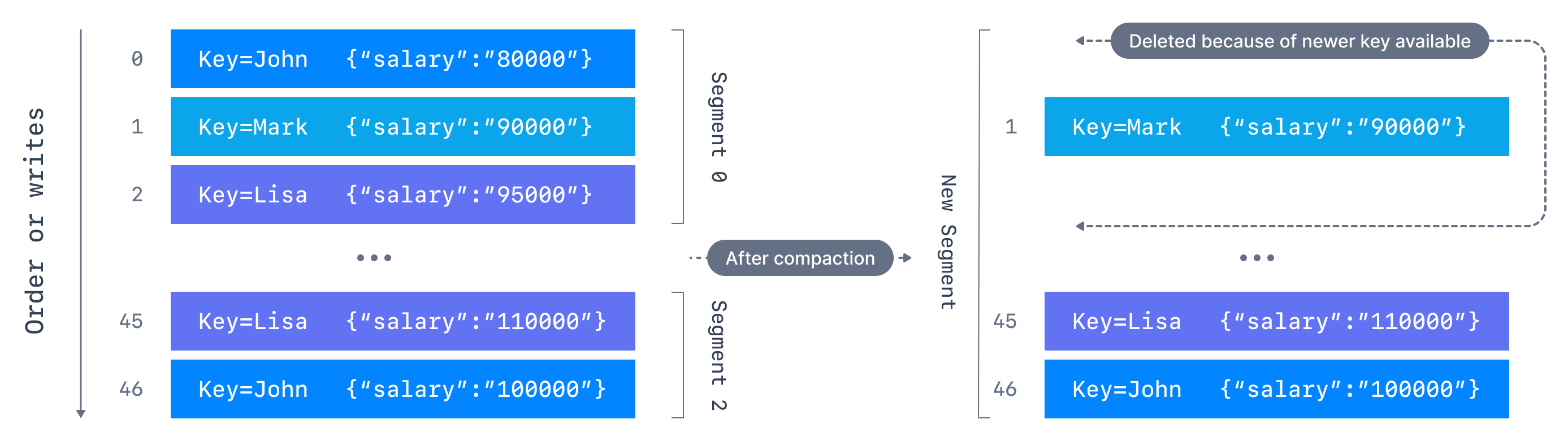

Conversely, messages written to Kafka are durable by default; they are not deleted after consumption. Kafka stores messages for a set amount of time and purges those older than the retention period. This behavior is controlled by the log.cleanup.policy setting. Its default value, delete, makes Kafka discard messages older than the configured retention time, which defaults to one week. When the policy is set to compact, Kafka retains at least the most recent value for each key in the topic. You can find more information about Kafka message retention here.
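The two cleanup policies can be contrasted with a small Python model of a partition’s log. It is a deliberate simplification: real Kafka deletes whole log segments rather than individual records, compacts in the background, and handles tombstones, none of which is modeled. The Record type, function names, keys, and timestamps are hypothetical.

```python
from dataclasses import dataclass

ONE_WEEK = 7 * 24 * 3600  # Kafka's default retention period, in seconds


@dataclass
class Record:
    offset: int
    key: str
    value: str
    timestamp: int  # seconds since an arbitrary epoch


def apply_delete_policy(log, now, retention=ONE_WEEK):
    """cleanup policy 'delete': drop records older than the retention period."""
    return [r for r in log if now - r.timestamp <= retention]


def apply_compact_policy(log):
    """cleanup policy 'compact': keep only the latest record for each key."""
    latest = {}
    for record in log:          # later offsets overwrite earlier ones
        latest[record.key] = record
    # Surviving records keep their original offsets, so gaps are expected.
    return sorted(latest.values(), key=lambda r: r.offset)


log = [
    Record(0, "user-1", "old@example.com", timestamp=0),
    Record(1, "user-2", "b@example.com", timestamp=1_000),
    Record(2, "user-1", "new@example.com", timestamp=ONE_WEEK + 100),
    Record(3, "user-2", "b2@example.com", timestamp=ONE_WEEK + 200),
]

print([r.offset for r in apply_delete_policy(log, now=ONE_WEEK + 500)])  # [1, 2, 3]
print([r.offset for r in apply_compact_policy(log)])                     # [2, 3]
```

Deletion is driven purely by age, so record 1 survives even though a newer value exists for its key. Compaction ignores age and keeps one record per key, which suits topics that act as a changelog of current state.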

The takeaway is that ActiveMQ and RabbitMQ treat messages as transient: they are removed once consumed, and persisting them to disk, when enabled, costs extra disk I/O. Kafka, by contrast, was designed to retain messages for the long term.

Message consumption - push vs. pull model

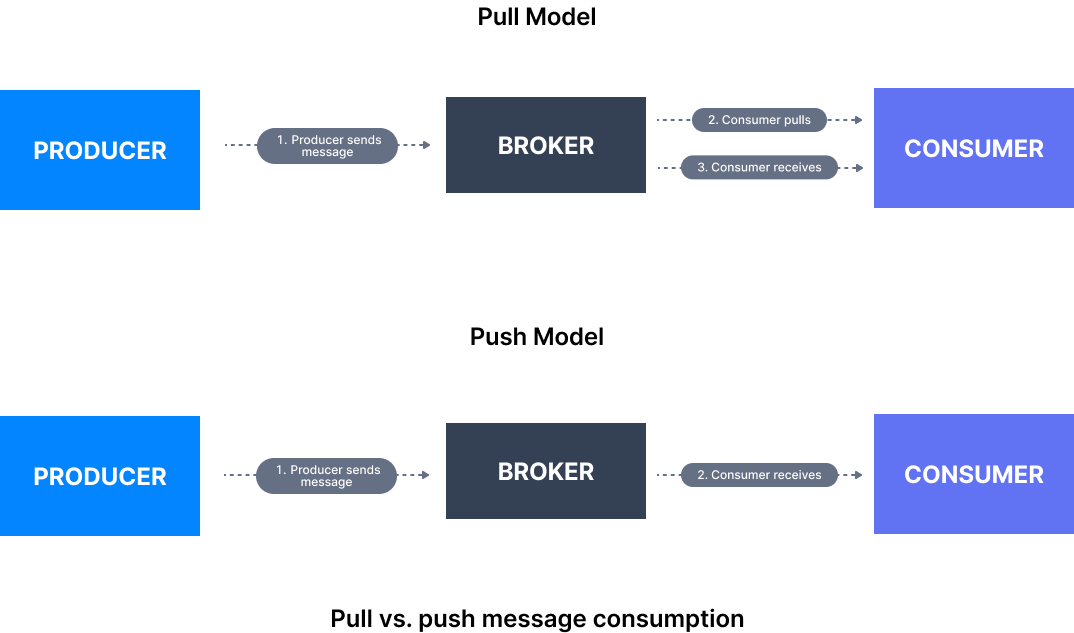

In the push model, the broker sends new messages to consumers as they arrive. It is the basis of the publish-subscribe pattern, where the broker pushes messages to all subscribers. In the pull model, consumers periodically poll the broker for new messages.

Typically, message brokers such as ActiveMQ and RabbitMQ support both push-based and pull-based consumption. For example, a JMS client of ActiveMQ can pull messages by calling receive() on a consumer, or register a MessageListener to have messages pushed to it. RabbitMQ likewise lets consumers subscribe to a queue for push delivery or fetch messages one at a time.
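The two consumption styles can be sketched with a toy Python broker. The method names loosely mirror JMS’s MessageConsumer.receive() and setMessageListener(), but ToyBroker itself is hypothetical and ignores acknowledgments, concurrency, and flow control. In pull mode the consumer decides when to ask for the next message. In push mode the broker invokes a registered callback as soon as a message is available.

```python
from collections import deque


class ToyBroker:
    """Hypothetical broker offering both push and pull consumption."""

    def __init__(self):
        self._queue = deque()
        self._listener = None

    def publish(self, message):
        if self._listener is not None:
            self._listener(message)      # push: delivered as soon as it arrives
        else:
            self._queue.append(message)  # held until a consumer pulls it

    def receive(self):
        """Pull: the consumer asks for the next message when it is ready."""
        return self._queue.popleft() if self._queue else None

    def set_listener(self, callback):
        """Push: register a callback, then drain anything already waiting."""
        self._listener = callback
        while self._queue:
            callback(self._queue.popleft())


broker = ToyBroker()
broker.publish("a")
broker.publish("b")
print(broker.receive())   # a  (pulled by the consumer)

pushed = []
broker.set_listener(pushed.append)   # "b" is pushed immediately
broker.publish("c")                  # "c" is pushed on arrival
print(pushed)             # ['b', 'c']
```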

On the other hand, Kafka supports only pull-based consumption. Each message in a Kafka partition has a monotonically increasing sequence number called an offset. A consumer reads forward, from lower to higher offsets, although it can seek back to an earlier offset to replay messages. This design lets consumers control the rate at which they consume topics.

The consumer is responsible for tracking its position and committing it to Kafka (by convention, as the offset of the next message to read), so that it can resume from there next time.
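The pull-and-commit cycle can be modeled in a few lines of Python. This is a toy sketch rather than the Kafka client API. Partition and OffsetConsumer are hypothetical, and the shared dictionary stands in for the offsets Kafka stores on the consumer group’s behalf. The partition is an append-only list that reading never shrinks, and the consumer advances its own position, commits it, and can seek backward to replay.

```python
class Partition:
    """Append-only log: reading never removes messages."""

    def __init__(self):
        self._log = []

    def append(self, value):
        self._log.append(value)
        return len(self._log) - 1  # offset assigned to the new message

    def read(self, offset, max_records):
        return self._log[offset:offset + max_records]


class OffsetConsumer:
    """Hypothetical pull-based consumer that tracks and commits its position."""

    def __init__(self, partition, group, committed):
        self.partition = partition
        self.group = group
        self.committed = committed                 # shared store: group -> offset
        self.position = committed.get(group, 0)    # resume from the last commit

    def poll(self, max_records=2):
        batch = self.partition.read(self.position, max_records)
        self.position += len(batch)                # reading moves forward
        return batch

    def commit(self):
        # As in Kafka, the committed offset is the NEXT offset to read.
        self.committed[self.group] = self.position

    def seek(self, offset):
        self.position = offset                     # e.g. rewind to replay


partition = Partition()
for event in ["e0", "e1", "e2", "e3"]:
    partition.append(event)

store = {}
consumer = OffsetConsumer(partition, "billing", store)
first = consumer.poll()        # ['e0', 'e1']
consumer.commit()              # store == {'billing': 2}

restarted = OffsetConsumer(partition, "billing", store)
second = restarted.poll()      # ['e2', 'e3']: resumes after the commit
restarted.seek(0)
replayed = restarted.poll(1)   # ['e0']: the data is still in the log
```

Because the log is not consumed destructively, a consumer in a different group (say, a hypothetical "analytics" group) would start from its own offset and see all four events.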

Fine-grained subscriptions and message filtering

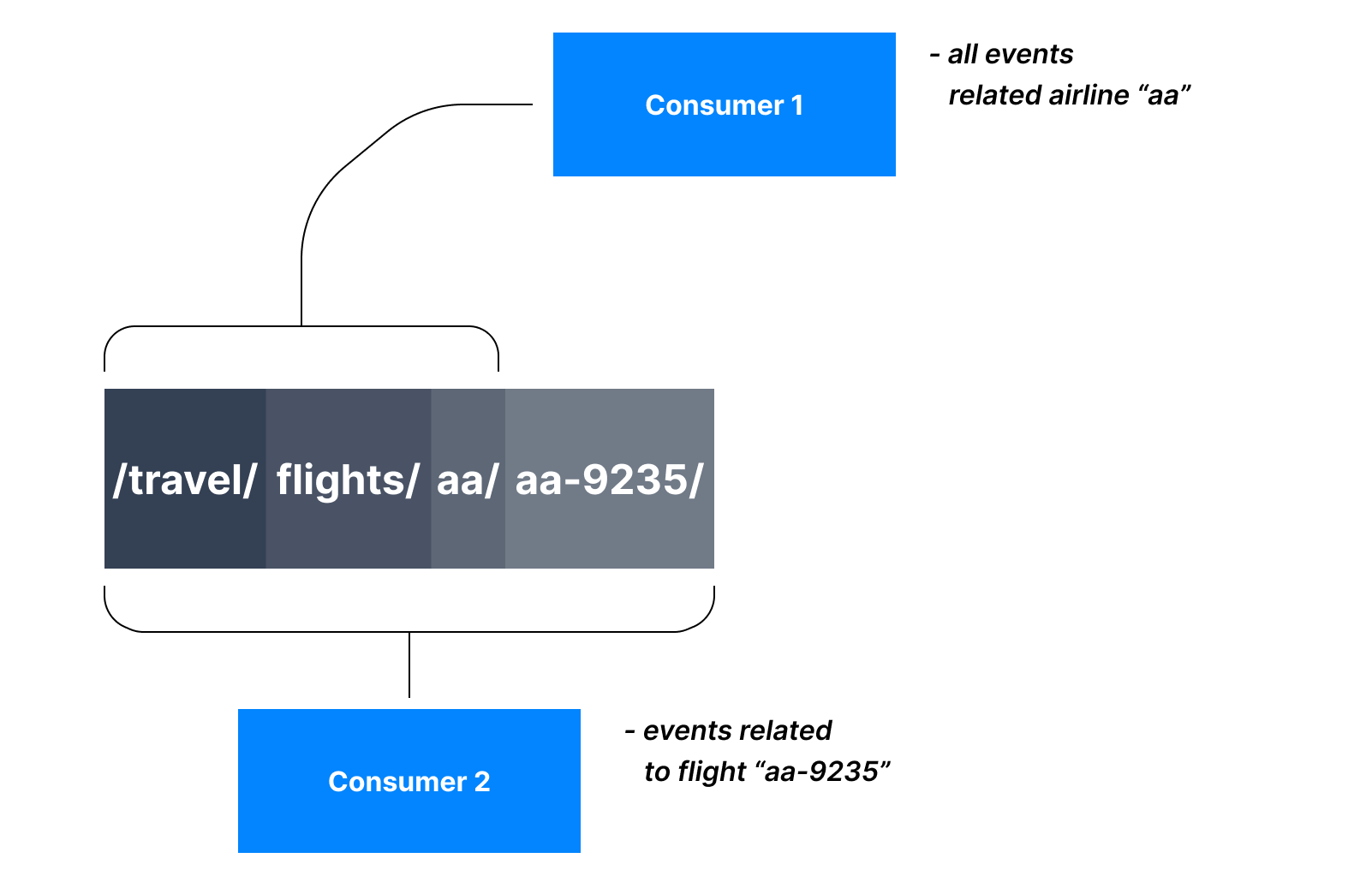

Message brokers like ActiveMQ and RabbitMQ offer hierarchical topics, giving consumers more subscription points and flexibility. A hierarchical topic is a structure representing a hierarchy such as /travel/flights/airline/flight-number/seat. Consumers can subscribe to different levels of the hierarchy with different values to select events at a finer granularity. In addition, message selectors can be used to further refine the events of interest.

For example, a consumer subscribing to /travel/flights/aa will receive any event related to American Airlines, whereas a subscription to /travel/flights/aa/aa-9235 delivers events for a specific flight.

ActiveMQ supports this with destination wildcards: destinations are organized into hierarchies, and consumers use wildcards to subscribe to a whole range of them at once. ActiveMQ also supports JMS selectors, which let consumers filter events at the broker level. RabbitMQ, in turn, uses features built into the AMQP protocol, such as topic exchanges, bindings, and routing keys, to offer fine-grained subscriptions and broker-level filtering.
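The wildcard idea can be sketched in Python using the matching rules of RabbitMQ topic exchanges. Routing keys are dot-separated words, * matches exactly one word, and # matches zero or more words. ActiveMQ Classic follows the same idea with * and > as its wildcards. The function is a straightforward recursive matcher written for illustration, not how RabbitMQ implements routing internally. The article’s slash-separated hierarchy is written here with dots.

```python
def topic_matches(binding_key: str, routing_key: str) -> bool:
    """Match a RabbitMQ-style topic binding against a routing key.

    Words are separated by '.', '*' matches exactly one word and
    '#' matches zero or more words.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    def match(i: int, j: int) -> bool:
        if i == len(pattern):
            return j == len(words)
        if pattern[i] == "#":
            # '#' may swallow zero words, or one more word and try again.
            return match(i + 1, j) or (j < len(words) and match(i, j + 1))
        if j == len(words):
            return False
        if pattern[i] == "*" or pattern[i] == words[j]:
            return match(i + 1, j + 1)
        return False

    return match(0, 0)


routing_key = "travel.flights.aa.aa-9235.seat-12a"

for binding in ["travel.flights.aa.#", "travel.flights.*.aa-9235.#",
                "travel.flights.ua.#", "travel.*"]:
    print(binding, topic_matches(binding, routing_key))
# travel.flights.aa.# True
# travel.flights.*.aa-9235.# True
# travel.flights.ua.# False
# travel.* False
```

The first binding behaves like the "all American Airlines events" subscription above, and the second narrows it to one flight on any airline segment. Because the broker evaluates these bindings, non-matching messages never reach the consumer.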

Conversely, Kafka doesn’t offer that level of granular subscriptions to topics. A consumer reading from a topic must do the filtering at the consumer level.

Message ordering

Strict message ordering is critical for some use cases, such as financial transactions, and it can be helpful to ensure that every consumer of a topic sees messages in the same order. ActiveMQ, RabbitMQ, and Kafka handle message ordering differently.

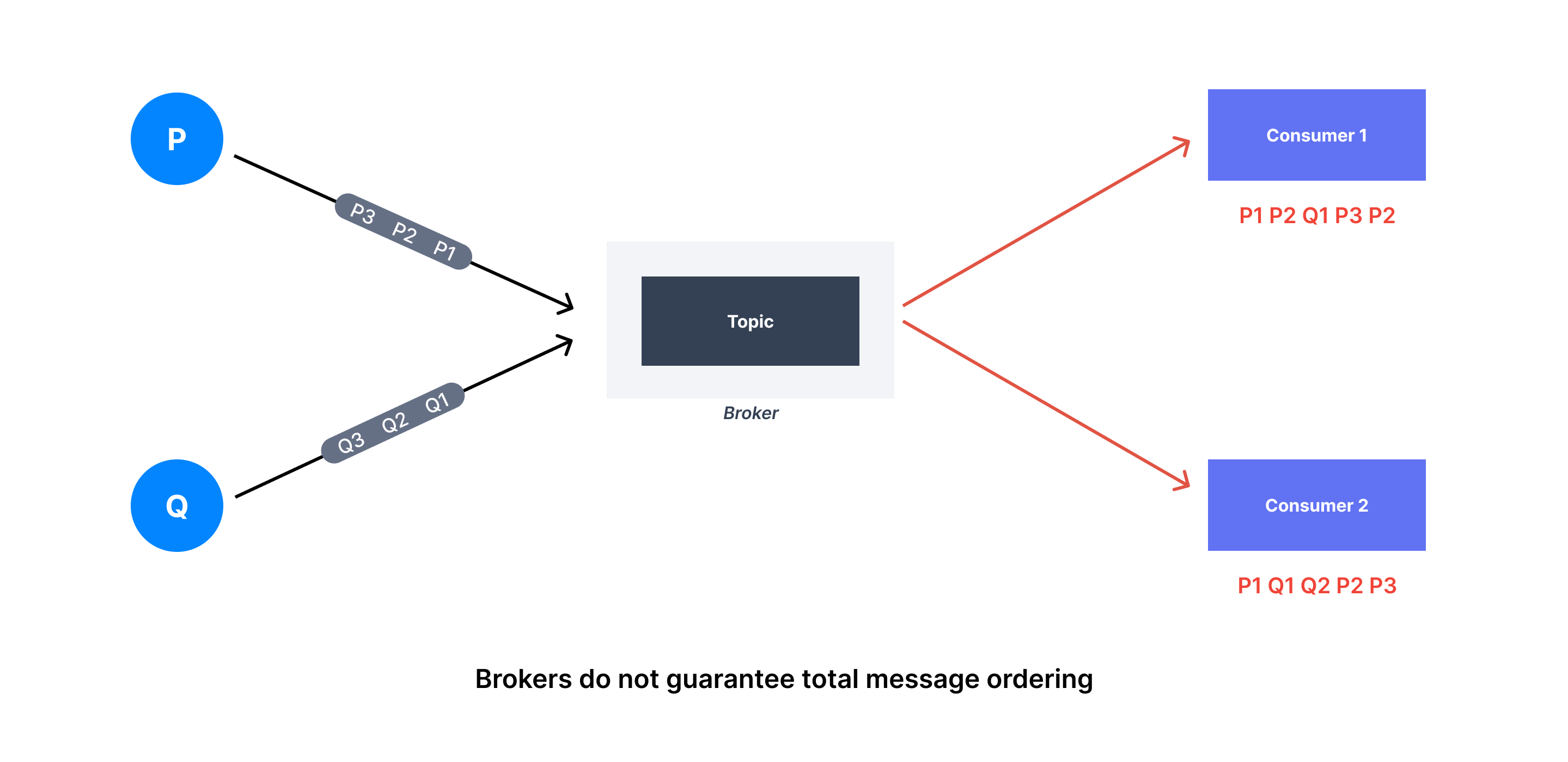

Brokers like ActiveMQ and RabbitMQ guarantee the order of all messages sent by the same producer. However, because the broker uses multiple threads and asynchronous processing, messages from different producers can reach different consumers in different orders.

For example, assume two producers, P and Q, simultaneously send messages to the same topic: P sends P1, P2, and P3, while Q sends Q1 and Q2.

Two different consumers could see messages arrive in the following order:

consumer1: P1 P2 Q1 P3 Q2

consumer2: P1 Q1 Q2 P2 P3
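Both sequences honor the per-producer guarantee even though they interleave differently. The small Python check below makes that explicit. It assumes the message IDs above follow a producer letter plus sequence number scheme, and the helper name is hypothetical.

```python
def preserves_producer_order(sequence):
    """True if each producer's messages appear in the order that producer sent them.

    Assumes IDs like 'P1' or 'Q2': producer letter followed by a sequence number.
    """
    last_seen = {}
    for msg in sequence:
        producer, seq = msg[0], int(msg[1:])
        if seq <= last_seen.get(producer, 0):
            return False
        last_seen[producer] = seq
    return True


consumer1 = "P1 P2 Q1 P3 Q2".split()
consumer2 = "P1 Q1 Q2 P2 P3".split()

print(preserves_producer_order(consumer1), preserves_producer_order(consumer2))  # True True
print(consumer1 == consumer2)  # False: the interleaving differs between consumers
```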

ActiveMQ offers total ordering on destinations to preserve message ordering across all consumers of a given topic. However, this comes at a performance cost, since it requires greater synchronization.

Kafka takes a different approach to ordering: it guarantees order within a partition, not across an entire topic. With the default partitioner, messages that share a partition key always end up in the same partition, regardless of which producer sent them, as long as the number of partitions does not change. Messages are appended to a partition in the order they arrive, so every consumer reads messages with the same partition key in the same strict order.
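Here is a minimal Python sketch of key-based partitioning, under stated assumptions. CRC32 from zlib stands in for the murmur2 hash that Kafka’s default partitioner actually uses, and the producer names, keys, and values are hypothetical. Two producers write events for the same account. Because the key alone decides the partition, the account’s history lands in one partition in write order.

```python
import zlib

NUM_PARTITIONS = 3


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    # Stand-in hash: Kafka's default partitioner uses murmur2, not CRC32.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Each partition is an append-only list of (producer, key, value) tuples.
partitions = {p: [] for p in range(NUM_PARTITIONS)}


def produce(producer: str, key: str, value: str) -> None:
    partitions[partition_for(key)].append((producer, key, value))


# Two producers write events for the same account, interleaved in time.
produce("P", "account-42", "deposit 100")
produce("Q", "account-42", "withdraw 30")
produce("P", "account-7", "deposit 5")
produce("P", "account-42", "withdraw 50")

p = partition_for("account-42")
history = [value for _, key, value in partitions[p] if key == "account-42"]
print(history)  # ['deposit 100', 'withdraw 30', 'withdraw 50']
```

The mapping depends on the partition count, so adding partitions to a topic can route a key’s future messages to a different partition. That is why the per-key guarantee assumes a fixed number of partitions.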

Messaging protocols and interfaces

Messaging protocols provide interoperability between messaging clients and brokers, enabling clients to move between brokers without code changes. AMQP, MQTT, and STOMP are well-known messaging protocols, while JMS is a widely used Java messaging API.

ActiveMQ is a multi-protocol broker with a pluggable protocol architecture. The current version supports protocols including AMQP, MQTT, STOMP, and OpenWire. Although JMS and Jakarta Messaging are standardized APIs, they do not define a network protocol; the ActiveMQ Artemis JMS and Jakarta Messaging clients are implemented on top of Artemis’s core protocol. ActiveMQ also provides a REST messaging interface.

RabbitMQ also supports several messaging protocols, both directly and through plugins. RabbitMQ was initially developed to support AMQP 0-9-1, which remains the broker’s "core" protocol. Apart from that, RabbitMQ supports other protocols, including STOMP and MQTT, as well as variants such as MQTT over WebSockets.

Conversely, Kafka uses its own binary wire protocol over TCP, with client implementations in languages including Java, Python, .NET, and Node.js. In addition, vendors like Confluent provide a REST proxy, a RESTful interface to Kafka.

Conclusion

When it comes to enterprise workloads, all three technologies provide comparable security, scalability, and transaction capabilities. However, the differences become clear when we narrow down to specific use cases.

ActiveMQ and RabbitMQ have been established as enterprise message brokers, providing reliable delivery guarantees across business applications. They are configured to acknowledge each message by default and equipped with built-in recovery mechanisms like dead letter queues (DLQ) for reliability. Also, they provide interfaces to popular messaging protocols, allowing more integration points with existing enterprise systems.

Therefore, consider ActiveMQ and RabbitMQ when building enterprise applications that can’t tolerate losing a single message.

Kafka is an event streaming platform designed to meet different expectations. Kafka has the edge over the other two technologies in performance, message retention, and message ordering. Kafka’s write-ahead log (WAL) follows a sequential-write principle, allowing low-latency, high-throughput writes at scale. Its partition-based design enables higher read capacity and strict ordering of messages within each partition. Also, Kafka’s storage can be extended for the long term with tiered storage, allowing you to access real-time and historical data from a single place.

Therefore, Kafka is ideal for building applications that demand more scalability, performance, message ordering, and longer retention periods. Transactional systems and data analytics applications are popular use cases for Kafka.

Streamline your Apache Kafka with a free trial of Conduktor.

We aim to accelerate the delivery of Kafka projects by making developers and organizations more efficient with Kafka.